2 – InfiniPath Cluster Administration

Memory Footprint

IB6054601-00 D 2-3


on system configuration. OpenFabrics support is under development and has not been fully characterized. Table 2-1 summarizes these guidelines.

Here is an example for a 1024-processor (1024-core) system:

■ 1024 cores over 256 nodes (each node has 2 sockets with dual-core processors)

■ 1 adapter per node

■ Each core runs an MPI process, with the 4 processes per node communicating via shared memory.

■ Each core uses OpenFabrics to connect with storage and file system targets, using 50 queue pairs (QPs) and 50 end-to-end contexts (EECs) per core.
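Combining these counts with the per-component figures in Table 2-1 gives a rough per-node estimate. The sketch below is only illustrative and rests on several assumptions that are not stated in the guide: 1 MB is taken as 2^20 bytes; the 264-byte term is charged by every local MPI process for every MPI rank on the subnet (the most conservative reading of "per MPI node"); the "1~6 MB" OpenFabrics base is carried as a low/high range; and memory regions (MRs, listed as TBD) and the openfabrics.org stack itself are left out. The function name `node_footprint` and its parameters are hypothetical.

```python
"""Rough per-node memory estimate for the 1024-core example (illustrative sketch).

Assumptions (not from the guide): 1 MB = 2**20 bytes; the 264-byte term is
charged by each local MPI process for every MPI rank on the subnet; MR costs
("TBD") and the openfabrics.org stack are excluded.
"""

MB = 2**20

# Per-component figures from Table 2-1.
DRIVER_BYTES = 9 * MB
MPI_PER_PROCESS_BYTES = 71 * MB      # default sendbuf/recvbuf settings
MPI_SHM_PER_NODE_BYTES = 32 * MB     # only when >1 process shares a node
MPI_PER_PEER_BYTES = 264             # per MPI node on the subnet
OF_BASE_BYTES = (1 * MB, 6 * MB)     # "1~6 MB", kept as a (low, high) range
OF_PER_QP_BYTES = 500                # approximate
OF_PER_EEC_BYTES = 500               # approximate


def node_footprint(cores_per_node, total_ranks, qps_per_core, eecs_per_core):
    """Return (low, high) estimated bytes per node; one MPI process per core."""
    mpi = cores_per_node * (MPI_PER_PROCESS_BYTES + total_ranks * MPI_PER_PEER_BYTES)
    if cores_per_node > 1:
        mpi += MPI_SHM_PER_NODE_BYTES
    # Per-QP and per-EEC costs scale with the number of cores using OpenFabrics.
    of_var = cores_per_node * (qps_per_core * OF_PER_QP_BYTES
                               + eecs_per_core * OF_PER_EEC_BYTES)
    low = DRIVER_BYTES + mpi + OF_BASE_BYTES[0] + of_var
    high = DRIVER_BYTES + mpi + OF_BASE_BYTES[1] + of_var
    return low, high


if __name__ == "__main__":
    # 256 nodes x 4 cores = 1024 ranks; 50 QPs and 50 EECs per core.
    low, high = node_footprint(cores_per_node=4, total_ranks=1024,
                               qps_per_core=50, eecs_per_core=50)
    print(f"per node: {low / MB:.1f} to {high / MB:.1f} MB")  # per node: 327.2 to 332.2 MB
```

Under these assumptions, the MPI processes and their shared memory region (about 317 MB) dominate; the QP and EEC costs add only about 0.2 MB per node.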

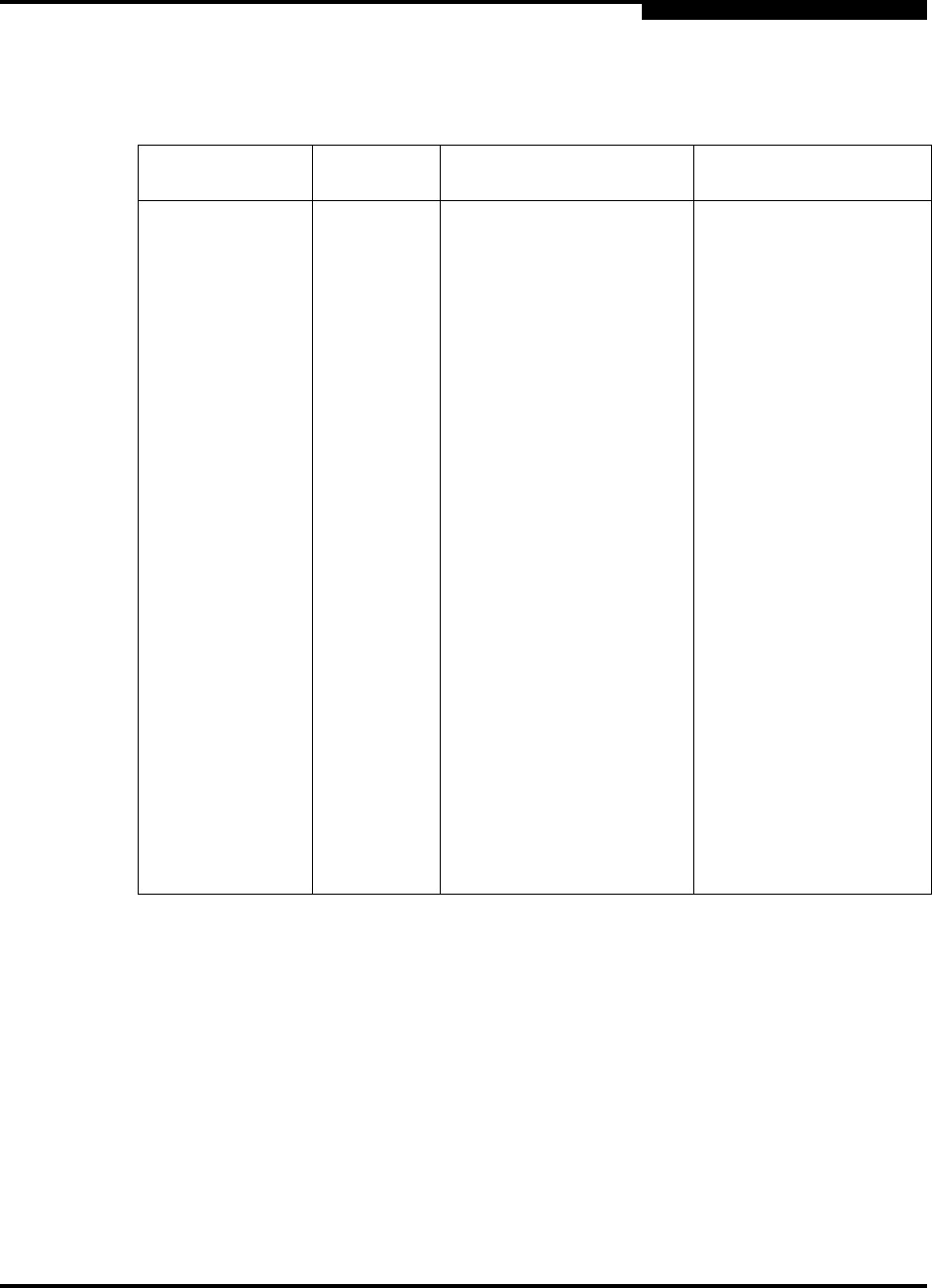

Table 2-1. Memory Footprint of the InfiniPath Adapter on Linux x86_64 Systems

| Adapter component | Required or optional | Memory footprint | Comment |
|---|---|---|---|
| InfiniPath driver | Required | 9 MB | Includes accelerated IP support. Includes table space to support systems of up to 1000 nodes. Clusters larger than 1000 nodes can also be configured. |
| MPI | Optional | 71 MB per process with default parameters: 60 MB + 512 × 2172 (sendbufs) + 4096 × 2176 (recvbufs) + 1024 × 1K (misc. allocations); plus 32 MB per node when multiple processes communicate via shared memory; plus 264 bytes per MPI node on the subnet | Several of these parameters (sendbufs, recvbufs, and the size of the shared memory region) are tunable if a reduced memory footprint is desired. |
| OpenFabrics | Optional | 1 to 6 MB; plus about 500 bytes per QP; plus TBD bytes per MR; plus about 500 bytes per EE context; plus the OpenFabrics stack from openfabrics.org (size not included in these guidelines) | This has not been fully characterized as of this writing. |
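The default MPI figure in Table 2-1 can be checked by evaluating its formula directly. The sketch below assumes 1 MB = 2^20 bytes and 1K = 1024 bytes; the function name `mpi_process_bytes` and its keyword arguments are hypothetical stand-ins for the tunable sendbuf and recvbuf counts, not actual MPI or driver settings.

```python
"""Evaluate the per-process MPI footprint formula from Table 2-1 (sketch).

Assumptions: 1 MB = 2**20 bytes, 1K = 1024 bytes. Parameter names are
illustrative only; they are not real InfiniPath MPI options.
"""

MB = 2**20
KB = 2**10


def mpi_process_bytes(n_sendbufs=512, n_recvbufs=4096,
                      sendbuf_size=2172, recvbuf_size=2176,
                      base=60 * MB, misc=1024 * KB):
    """Fixed base + send buffers + receive buffers + misc. allocations."""
    return base + n_sendbufs * sendbuf_size + n_recvbufs * recvbuf_size + misc


if __name__ == "__main__":
    default = mpi_process_bytes()
    print(f"default: {default / MB:.2f} MB")          # default: 70.56 MB
    fewer = mpi_process_bytes(n_recvbufs=2048)
    print(f"2048 recvbufs: {fewer / MB:.2f} MB")      # 2048 recvbufs: 66.31 MB
```

The default parameters evaluate to about 70.56 MB, which rounds to the 71 MB shown in the table. The receive buffers are the largest tunable term (about 8.5 MB at the default count), so halving their number saves roughly 4.25 MB per process.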