
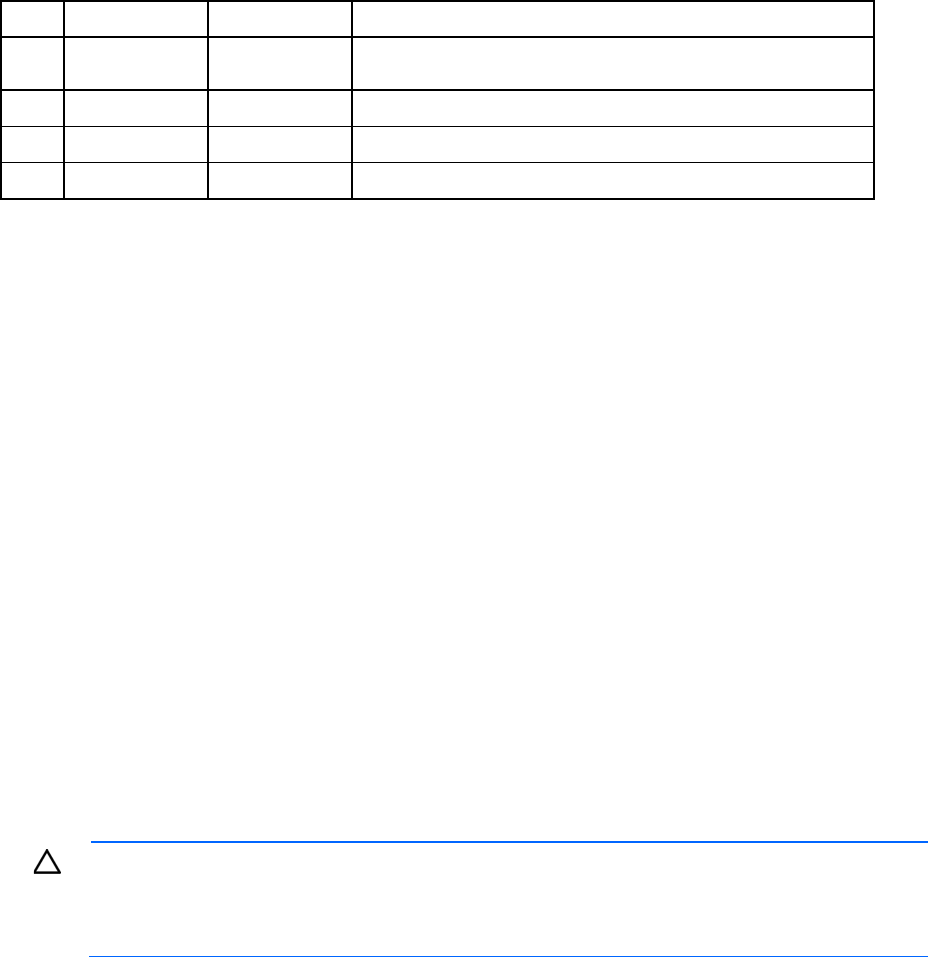

| Item | LED | Status | Definition |
|------|-----|--------|------------|
| | | Flashing amber/green | The drive is a member of one or more logical drives and predicts that it will fail. |
| | | Flashing amber | The drive is not configured and predicts that it will fail. |
| | | Solid amber | The drive has failed. |
| | | Off | The drive is not configured by a RAID controller. |

The blue Locate LED is behind the release lever and is visible when illuminated.

Recognizing drive failure

Any of the following can indicate that a drive has failed:

• The fault LED illuminates.

• When failed drives are located inside the server or storage system and the drive LEDs are not visible, the amber LED on the front of the server or storage system illuminates. This LED also illuminates when other problems occur, such as when a fan fails, a redundant power supply fails, or the system overheats.

• A POST message lists failed drives when the system is restarted, as long as the controller detects at least one functional drive.

• ACU represents failed drives with a distinctive icon.

• HP Systems Insight Manager can detect failed drives remotely across a network. For more information about HP Systems Insight Manager, see the documentation on the Management CD.

• The HP System Management Homepage (SMH) indicates that a drive has failed.

• The Event Notification Service posts an event to the server IML and the Microsoft® Windows® system event log.

• ADU lists all failed drives.

For additional information about diagnosing drive problems, see the HP Servers Troubleshooting Guide.

CAUTION: A drive that the controller has previously failed might seem to be operational after the system is power-cycled or (for a hot-pluggable drive) after the drive has been removed and reinserted. However, continued use of such a marginal drive can eventually result in data loss. Replace the marginal drive as soon as possible.

Effects of a drive failure

When a drive fails, all logical drives that are in the same array are affected. Each logical drive in an array might be using a different fault-tolerance method, so each logical drive can be affected differently.

• RAID 0 configurations cannot tolerate drive failure. If any physical drive in the array fails, all RAID 0 logical drives in the same array also fail.

• RAID 1+0 configurations can tolerate multiple drive failures if no failed drives are mirrored to one another.

• RAID 5 configurations can tolerate one drive failure.

• RAID 50 configurations can tolerate one failed drive in each parity group.
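
The tolerance rules listed above can be expressed as a small check. The following Python sketch is illustrative only and is not part of any HP utility: the function name `survives`, the integer drive IDs, and the `groups` parameter (mirrored pairs for RAID 1+0, parity groups for RAID 50) are hypothetical. It covers only the four RAID levels listed above.

```python
from typing import Iterable, Sequence, Set


def survives(raid_level: str, failed: Set[int],
             groups: Sequence[Iterable[int]] = ()) -> bool:
    """Return True if a logical drive tolerates the given failed drives.

    failed -- IDs of failed physical drives in the array (hypothetical numbering)
    groups -- mirrored pairs (RAID 1+0) or parity groups (RAID 50); unused otherwise
    """
    if raid_level == "0":
        # RAID 0 has no redundancy: any failed drive fails the logical drive.
        return len(failed) == 0
    if raid_level == "5":
        # RAID 5 tolerates one failed drive.
        return len(failed) <= 1
    if raid_level in ("1+0", "50"):
        # RAID 1+0: no two failed drives may be mirrored to one another.
        # RAID 50: at most one failed drive in each parity group.
        return all(len(set(group) & failed) <= 1 for group in groups)
    raise ValueError(f"RAID level not covered by this sketch: {raid_level}")


# Hypothetical array of four drives arranged as two mirrored pairs.
mirrors = [(0, 1), (2, 3)]
print(survives("1+0", {0, 2}, mirrors))  # True: failed drives are in different pairs
print(survives("1+0", {0, 1}, mirrors))  # False: both drives of one pair failed
```

Because each logical drive in an array can use a different fault-tolerance method, the same set of failed drives would be checked once per logical drive, with that logical drive's RAID level.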