Vol. 3A 8-9

ADVANCED PROGRAMMABLE INTERRUPT CONTROLLER (APIC)

8.4.2 Presence of the Local APIC

Beginning with the P6 family processors, the presence or absence of an on-chip local APIC can be detected using the CPUID instruction. When the CPUID instruction is executed with a source operand of 1 in the EAX register, bit 9 of the CPUID feature flags returned in the EDX register indicates the presence (set) or absence (clear) of a local APIC.
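The check described in this section can be sketched in C as follows. This is a minimal illustration, not a definitive implementation: it assumes a GCC or Clang toolchain that provides `<cpuid.h>` and its `__get_cpuid` helper, and the names `CPUID_EDX_APIC_BIT`, `edx_reports_local_apic`, and `local_apic_present` are hypothetical, chosen only for this example.

```c
#include <stdbool.h>
#include <stdint.h>

/* Assumption: <cpuid.h> is the GCC/Clang helper header; other compilers
 * expose CPUID differently and take the fallback path below. */
#if defined(__GNUC__) && (defined(__i386__) || defined(__x86_64__))
#include <cpuid.h>
#endif

/* Bit 9 of the feature flags returned in EDX by CPUID with EAX = 1. */
#define CPUID_EDX_APIC_BIT (UINT32_C(1) << 9)

/* Hypothetical helper: decode the local APIC flag from an EDX value. */
bool edx_reports_local_apic(uint32_t edx)
{
    return (edx & CPUID_EDX_APIC_BIT) != 0;
}

/*
 * Hypothetical wrapper: returns 1 if a local APIC is reported, 0 if it is
 * not, and -1 if CPUID with EAX = 1 could not be queried (for example on a
 * non-x86 target or an unsupported compiler).
 */
int local_apic_present(void)
{
#if defined(__GNUC__) && (defined(__i386__) || defined(__x86_64__))
    unsigned int eax, ebx, ecx, edx;

    /* __get_cpuid returns 0 when the requested leaf is not supported. */
    if (!__get_cpuid(1, &eax, &ebx, &ecx, &edx))
        return -1;
    return edx_reports_local_apic(edx) ? 1 : 0;
#else
    return -1;
#endif
}
```

Keeping the bit test separate from the CPUID call lets the decoding logic be exercised with known EDX values on any host, while the wrapper is the only part that depends on the processor.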

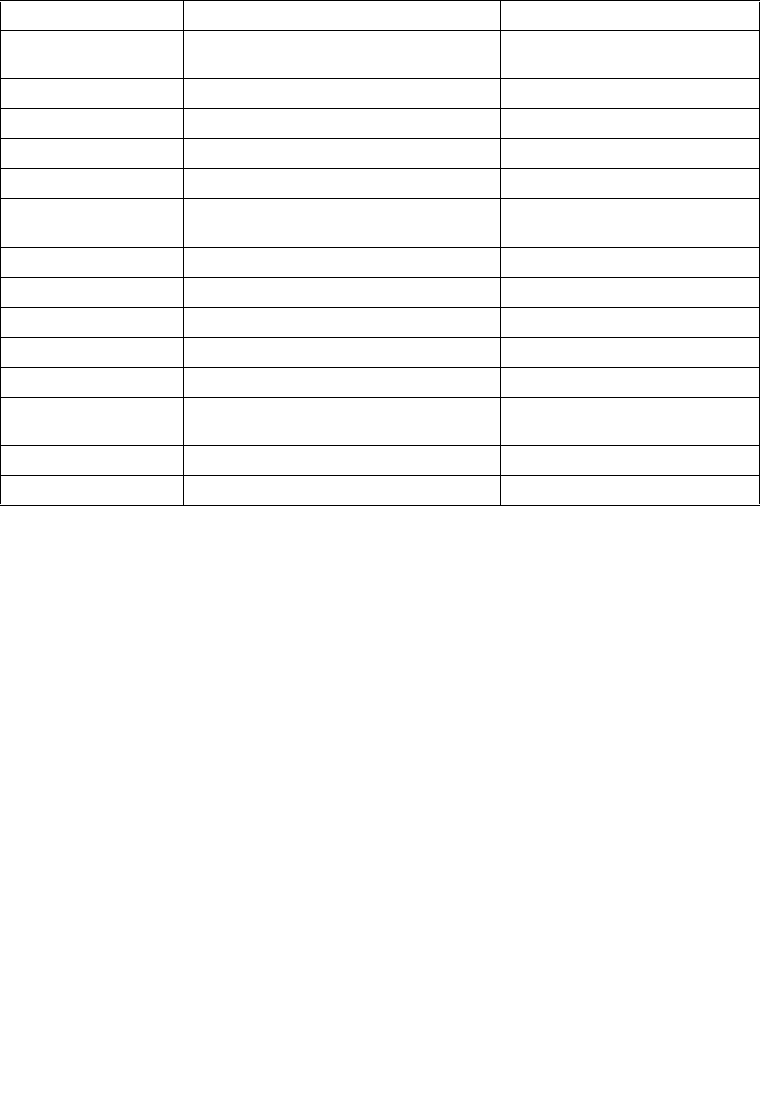

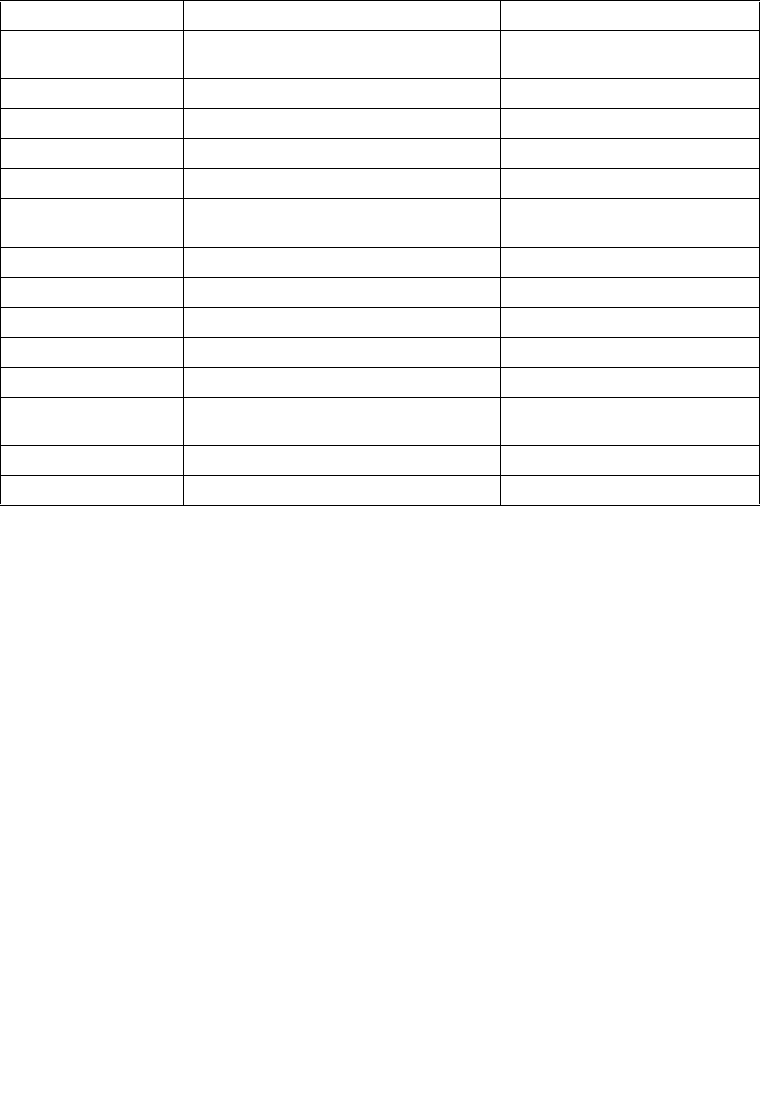

Table 8-1. Local APIC Register Address Map (Contd.)

| Address | Register Name | Software Read/Write |
|---|---|---|
| FEE0 0290H through FEE0 02F0H | Reserved | |
| FEE0 0300H | Interrupt Command Register (ICR) [0-31] | Read/Write. |
| FEE0 0310H | Interrupt Command Register (ICR) [32-63] | Read/Write. |
| FEE0 0320H | LVT Timer Register | Read/Write. |
| FEE0 0330H | LVT Thermal Sensor Register² | Read/Write. |
| FEE0 0340H | LVT Performance Monitoring Counters Register³ | Read/Write. |
| FEE0 0350H | LVT LINT0 Register | Read/Write. |
| FEE0 0360H | LVT LINT1 Register | Read/Write. |
| FEE0 0370H | LVT Error Register | Read/Write. |
| FEE0 0380H | Initial Count Register (for Timer) | Read/Write. |
| FEE0 0390H | Current Count Register (for Timer) | Read Only. |
| FEE0 03A0H through FEE0 03D0H | Reserved | |
| FEE0 03E0H | Divide Configuration Register (for Timer) | Read/Write. |
| FEE0 03F0H | Reserved | |

NOTES:

1. Not supported in the Pentium 4 and Intel Xeon processors.
2. Introduced in the Pentium 4 and Intel Xeon processors. This APIC register and its associated function are implementation dependent and may not be present in future IA-32 processors.
3. Introduced in the Pentium Pro processor. This APIC register and its associated function are implementation dependent and may not be present in future IA-32 processors.
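To illustrate how software might use the addresses in Table 8-1, the sketch below expresses each register in this part of the table as an offset from FEE0 0000H and wraps register access in two small helpers. It is an illustration under stated assumptions, not a definitive driver: the enum and function names are hypothetical, the base is passed as a parameter because the code assumes the caller has already mapped the register block into its address space, and every access is assumed to be a single aligned 32-bit load or store.

```c
#include <stdint.h>

/* Base implied by the addresses in Table 8-1 (FEE0 0000H). */
#define LAPIC_DEFAULT_BASE UINT32_C(0xFEE00000)

/* Offsets from the base, taken from Table 8-1. Names are hypothetical. */
enum lapic_reg {
    LAPIC_ICR_LOW          = 0x300, /* ICR bits 0-31                 */
    LAPIC_ICR_HIGH         = 0x310, /* ICR bits 32-63                */
    LAPIC_LVT_TIMER        = 0x320,
    LAPIC_LVT_THERMAL      = 0x330, /* implementation dependent      */
    LAPIC_LVT_PERFMON      = 0x340, /* implementation dependent      */
    LAPIC_LVT_LINT0        = 0x350,
    LAPIC_LVT_LINT1        = 0x360,
    LAPIC_LVT_ERROR        = 0x370,
    LAPIC_TIMER_INIT_COUNT = 0x380,
    LAPIC_TIMER_CUR_COUNT  = 0x390, /* read only                     */
    LAPIC_TIMER_DIVIDE_CFG = 0x3E0
};

/* Assumption: base points at a mapped, suitably aligned register block. */
volatile uint32_t *lapic_reg_ptr(volatile void *base, enum lapic_reg reg)
{
    return (volatile uint32_t *)((volatile uint8_t *)base + reg);
}

/* Read one register with a single 32-bit load. */
uint32_t lapic_read(volatile void *base, enum lapic_reg reg)
{
    return *lapic_reg_ptr(base, reg);
}

/* Write one register with a single 32-bit store. The caller is responsible
 * for not writing read-only or reserved locations. */
void lapic_write(volatile void *base, enum lapic_reg reg, uint32_t value)
{
    *lapic_reg_ptr(base, reg) = value;
}
```

Because the base is a parameter, the helpers can be exercised against an ordinary array in a user-space test. On real hardware they would only be meaningful in privileged code that has mapped the APIC register block, for example `lapic_write(mapped_base, LAPIC_TIMER_INIT_COUNT, count)`, where `mapped_base` and `count` are placeholders.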