5.2.5 Scheduling

Scheduling is one of the features most improved in the SLES 2.6 kernel compared with the 2.4 kernel. It uses a new scheduling algorithm, called the O(1) algorithm, that provides greatly increased scheduling scalability.

The O(1) algorithm achieves this by ensuring that the time taken to choose a process to run is constant, regardless of the number of processes. As a result, the new scheduler scales well with both process count and processor count, and it imposes low overhead on the system.

In the Linux 2.6 scheduler, time to select the best task and get it on a processor is constant, regardless of the load on the system or the number of CPUs for which it is scheduling.
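The reason the selection cost stays constant can be illustrated with a small Python model. It is a sketch under stated assumptions, not kernel code: it assumes 140 priority levels numbered 0 to 139 (0 is the highest) and records which levels hold runnable tasks as one bit per level; the function names `mark_nonempty` and `find_highest_prio` are hypothetical.

```python
NUM_PRIOS = 140  # assumed priority levels 0..139; 0 is the highest priority


def mark_nonempty(bitmap: int, prio: int) -> int:
    """Return the bitmap with the bit for `prio` set (level has runnable tasks)."""
    if not 0 <= prio < NUM_PRIOS:
        raise ValueError("priority out of range")
    return bitmap | (1 << prio)


def find_highest_prio(bitmap: int) -> int:
    """Return the highest-priority (lowest-numbered) non-empty level, or -1.

    The work is bounded by the fixed 140-bit width of the bitmap, not by
    how many tasks are queued at each level.
    """
    if bitmap == 0:
        return -1
    lowest_bit = bitmap & -bitmap  # isolate the lowest set bit
    return lowest_bit.bit_length() - 1


# One task or ten thousand tasks at priority 120 produce the same bitmap,
# so the selection step costs the same in both cases.
few = mark_nonempty(0, 120)
many = 0
for _ in range(10_000):
    many = mark_nonempty(many, 120)
print(few == many)  # → True

bitmap = 0
for prio in (120, 100, 139):
    bitmap = mark_nonempty(bitmap, prio)
print(find_highest_prio(bitmap))  # → 100
```

Because the bitmap only records whether a level is non-empty, the lookup never visits individual tasks.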

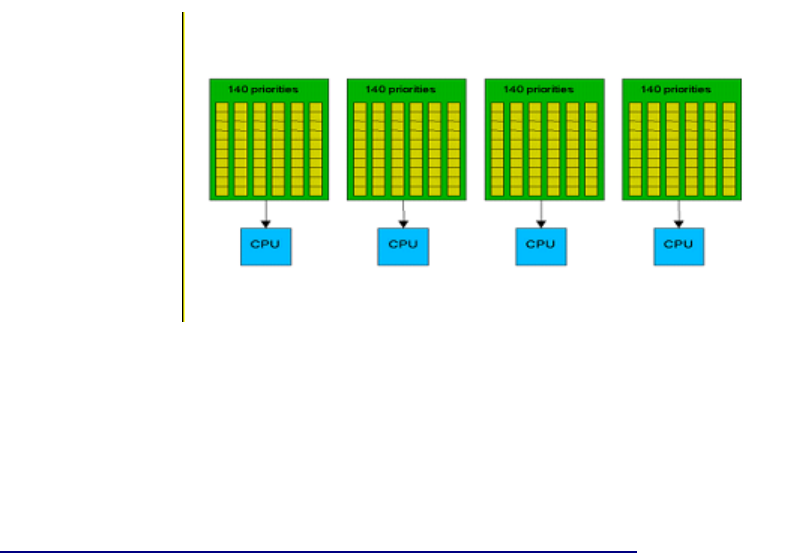

Instead of one queue for the whole system, each CPU has one active queue for each of the 140 possible priorities. As tasks gain or lose priority, they are moved to the appropriate queue on the processor on which they last ran. This makes it easy for a processor to find the highest-priority task: it only has to locate the first non-empty priority queue, which the kernel tracks in a bitmap. As tasks use up their time slices, they move to a second set of 140 parallel queues per processor, called the expired queues. When the active queues are empty, a simple pointer swap makes the expired queues the active queues again, which makes turnaround very efficient.
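The active and expired queues described above can be modeled as follows. This is a simplified sketch, not the kernel's implementation: the class and method names (`PrioArray`, `RunQueue`, `slice_used_up`) are hypothetical, and it omits details such as recomputing a task's priority and time slice when the slice expires.

```python
from collections import deque
from typing import Deque, List, Optional, Tuple

NUM_PRIOS = 140  # priority levels 0..139; 0 is the highest priority


class PrioArray:
    """One FIFO queue per priority level plus a bitmap of non-empty levels."""

    def __init__(self) -> None:
        self.queues: List[Deque[str]] = [deque() for _ in range(NUM_PRIOS)]
        self.bitmap = 0
        self.nr_tasks = 0

    def enqueue(self, task: str, prio: int) -> None:
        self.queues[prio].append(task)
        self.bitmap |= 1 << prio
        self.nr_tasks += 1

    def dequeue_highest(self) -> Optional[Tuple[str, int]]:
        """Remove and return the first task at the best non-empty level."""
        if self.bitmap == 0:
            return None
        prio = (self.bitmap & -self.bitmap).bit_length() - 1
        task = self.queues[prio].popleft()
        if not self.queues[prio]:
            self.bitmap &= ~(1 << prio)  # level is now empty
        self.nr_tasks -= 1
        return task, prio


class RunQueue:
    """Simplified per-CPU run queue with an active and an expired array."""

    def __init__(self) -> None:
        self.active = PrioArray()
        self.expired = PrioArray()

    def add(self, task: str, prio: int) -> None:
        self.active.enqueue(task, prio)

    def slice_used_up(self, task: str, prio: int) -> None:
        # Simplification: the task keeps its priority; the real scheduler
        # recomputes priority and time slice at this point.
        self.expired.enqueue(task, prio)

    def schedule(self) -> Optional[Tuple[str, int]]:
        if self.active.nr_tasks == 0:
            # Exchange the two references; no task is copied or moved.
            self.active, self.expired = self.expired, self.active
        return self.active.dequeue_highest()


rq = RunQueue()
rq.add("editor", 110)
rq.add("daemon", 120)
order = []
for _ in range(4):
    picked = rq.schedule()
    assert picked is not None
    task, prio = picked
    order.append(task)
    rq.slice_used_up(task, prio)  # in this demo every task uses its full slice
print(order)  # → ['editor', 'daemon', 'editor', 'daemon']
```

The swap in `schedule` costs the same whether the expired array holds two tasks or thousands, which is the point of keeping two arrays per CPU.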

For more information about O(1) scheduling, refer to Chapter 3 of Linux Kernel Development: A Practical Guide to the Design and Implementation of the Linux Kernel by Robert Love, or to “Towards Linux 2.6: A look into the workings of the next new kernel” by Anand K. Santhanam at http://www-106.ibm.com/developerworks/linux/library/l-inside.html#h1.

The SLES kernel also includes scheduler support that improves the performance of hyperthreaded CPUs. Hyperthreading is a technique in which a single physical processor appears at the hardware level as two or more logical processors. It enables multithreaded server applications to execute threads in parallel within each physical processor, thereby improving transaction rates and response times.

Hyperthreading scheduler

This section describes scheduler support for hyperthreaded CPUs. Hyperthreading support enables the scheduler to distinguish between physical CPUs and logical (hyperthreaded) CPUs. Scheduler run queues are implemented for each physical CPU, rather than for each logical CPU as was previously the case. As a result, processes are spread evenly across physical CPUs, which maximizes utilization of resources such as CPU caches and instruction buffers.
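The effect of keeping queues per physical CPU rather than per logical CPU can be shown with a toy placement model. The topology (two physical CPUs, each with two hyperthread siblings), the "least-loaded queue, lowest id wins ties" placement rule, and the function names are all hypothetical assumptions for illustration, not the kernel's load-balancing logic.

```python
from typing import Dict, List

# Hypothetical topology: logical CPU id -> physical CPU id.
# Logical CPUs 0 and 1 are hyperthread siblings on physical CPU 0,
# logical CPUs 2 and 3 are siblings on physical CPU 1.
TOPOLOGY: Dict[int, int] = {0: 0, 1: 0, 2: 1, 3: 1}


def place_per_logical(topology: Dict[int, int], tasks: List[str]) -> List[int]:
    """Per-logical-CPU queues: return the physical CPU each task lands on."""
    queues: Dict[int, List[str]] = {cpu: [] for cpu in sorted(topology)}
    placed = []
    for task in tasks:
        # Least-loaded logical CPU; ties go to the lowest id.
        cpu = min(queues, key=lambda c: len(queues[c]))
        queues[cpu].append(task)
        placed.append(topology[cpu])
    return placed


def place_per_physical(topology: Dict[int, int], tasks: List[str]) -> List[int]:
    """Per-physical-CPU queues: return the physical CPU each task lands on."""
    queues: Dict[int, List[str]] = {p: [] for p in sorted(set(topology.values()))}
    placed = []
    for task in tasks:
        # Least-loaded physical CPU; either sibling of that CPU may run it.
        phys = min(queues, key=lambda p: len(queues[p]))
        queues[phys].append(task)
        placed.append(phys)
    return placed


tasks = ["A", "B"]
print(place_per_logical(TOPOLOGY, tasks))   # → [0, 0]
print(place_per_physical(TOPOLOGY, tasks))  # → [0, 1]
```

With per-logical queues in this model, two tasks can land on sibling threads of the same physical CPU while the other physical CPU sits idle; with per-physical queues they are spread across both physical CPUs.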


Figure 5-13: O(1) scheduling