28 DS6000 Series: Concepts and Architecture

is not feasible in real-life systems), SARC uses prefetching for sequential workloads.

Sequential access patterns naturally arise in video-on-demand, database scans, copy,

backup, and recovery. The goal of sequential prefetching is to detect sequential access and

effectively pre-load the cache with data so as to minimize cache misses.

For prefetching, the cache management uses tracks. To detect a sequential access pattern,

counters are maintained with every track, to record if a track has been accessed together with

its predecessor. Sequential prefetching becomes active only when these counters suggest a

sequential access pattern. In this manner, the DS6000/DS8000 monitors application read-I/O

patterns and dynamically determines whether it is optimal to stage into cache one of the

following:

Just the page requested.

That page requested plus remaining data on the disk track.

An entire disk track (or a set of disk tracks) which has (have) not yet been requested.

The decision of when and what to prefetch is essentially made on a per-application basis

(rather than a system-wide basis) to be sensitive to the different data reference patterns of

different applications that can be running concurrently.

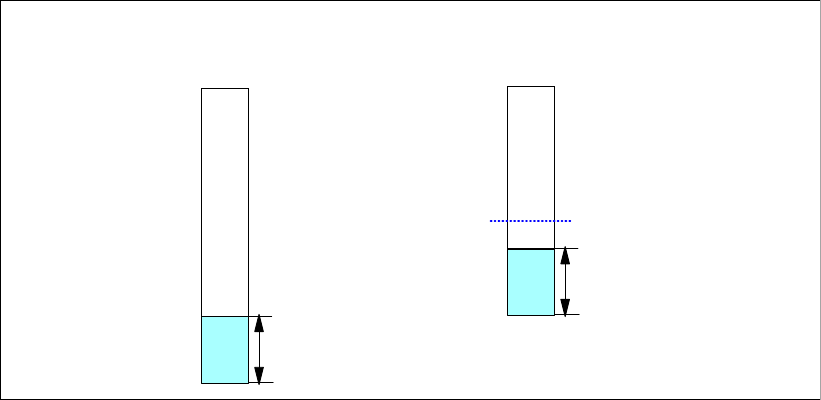

To decide which pages are evicted when the cache is full, sequential and random

(non-sequential) data is separated into different lists (see Figure 2-5). A page which has been

brought into the cache by simple demand paging is added to the MRU (Most Recently Used)

head of the RANDOM list. Without further I/O access, it goes down to the LRU (Least

Recently Used) bottom. A page which has been brought into the cache by a sequential

access or by sequential prefetching is added to the MRU head of the SEQ list and then goes

in that list. Additional rules control the migration of pages between the lists to not keep the

same pages twice in memory.

Figure 2-5 Cache lists of the SARC algorithm for random and sequential data

To follow workload changes, the algorithm trades cache space between the RANDOM and

SEQ lists dynamically and adaptively. This makes SARC scan-resistant, so that one-time

sequential requests do not pollute the whole cache. SARC maintains a desired size

parameter for the sequential list. The desired size is continually adapted in response to the

workload. Specifically, if the bottom portion of the SEQ list is found to be more valuable than

the bottom portion of the RANDOM list, then the desired size is increased; otherwise, the

desired size is decreased. The constant adaptation strives to make the optimal use of limited

RANDOM

LRU

MRU

RANDOM bottom

SEQ

LRU

MRU

SEQ bottom

Desired size